13/11/2025RAG Evaluation: Using Claude Skills to craft a truly challenging Q&A set

Table of Contents

- Why build a custom dataset

- What we tried and what we learned

- Our approach

- Dataset structure (char-based, chunk-agnostic)

- Question generation

- Easy

- Medium

- Answer and passage generation

- Easy

- Medium (multi-hop and wide)

- Public dataset: D&D SRD 5.2.1

- Current limitations and future improvements

- Conclusions and next steps

Why build a custom dataset

If you want to test a RAG system—or even just a single component like retrieval or generation—you need datasets that reflect real-world challenges. Precise metrics give you results that tell you not just whether one solution beats another, but when and why it works better. We specifically wanted to stress-test:

- Multi-hop queries with many steps, where answers require chaining information across multiple sources

- Wide questions that span multiple documents and heterogeneous sources

- Standardized, agnostic structure that lets us test different chunking and preprocessing strategies

What we tried and what we learned

We explored several public datasets:

- LegalBench: A collaborative benchmark for LLM legal reasoning across dozens of heterogeneous tasks (statutes, contracts, case law, etc.), with varying difficulty levels and contributions from the legal community. Overview

- MultiHop-RAG: A dataset for evaluating RAG on multi-hop questions that require retrieval and reasoning across multiple documents; includes thousands of queries with evidence distributed across 2–4 documents. GitHub · Hugging Face

- LoCoMo: A benchmark for memory and reasoning in long-term conversations (multi-session, sometimes multimodal), with annotations for QA and event summarization. GitHub

The pattern we kept seeing: our solutions would perform well on public datasets but deliver disappointing results on client data.

We needed datasets that better aligned with our goals. Public datasets often lack multi-hop queries with many steps and intensive reasoning—exactly what we encounter in production. Most also use chunk-based schemas or coarse-grained annotations, or schemas that vary across datasets, limiting portability.

Our approach

We built two internal evaluation datasets and one public dataset based on D&D SRD 5.2.1. All three have high skill ceilings (multi-hop, reasoning, broad coverage). The key feature: a standardized char-based structure that makes the dataset chunk-agnostic.

Design objectives

- Chunk-agnostic: Character spans work with any splitter

- Reproducible: Stable

start_char/end_charreferences in markdown KB files - Domain expert validation: Quality control with approval, modification, or rejection for every entry

- Controlled difficulty: Easy and medium levels to cover different scenarios and isolate performance characteristics

Dataset structure (char-based, chunk-agnostic)

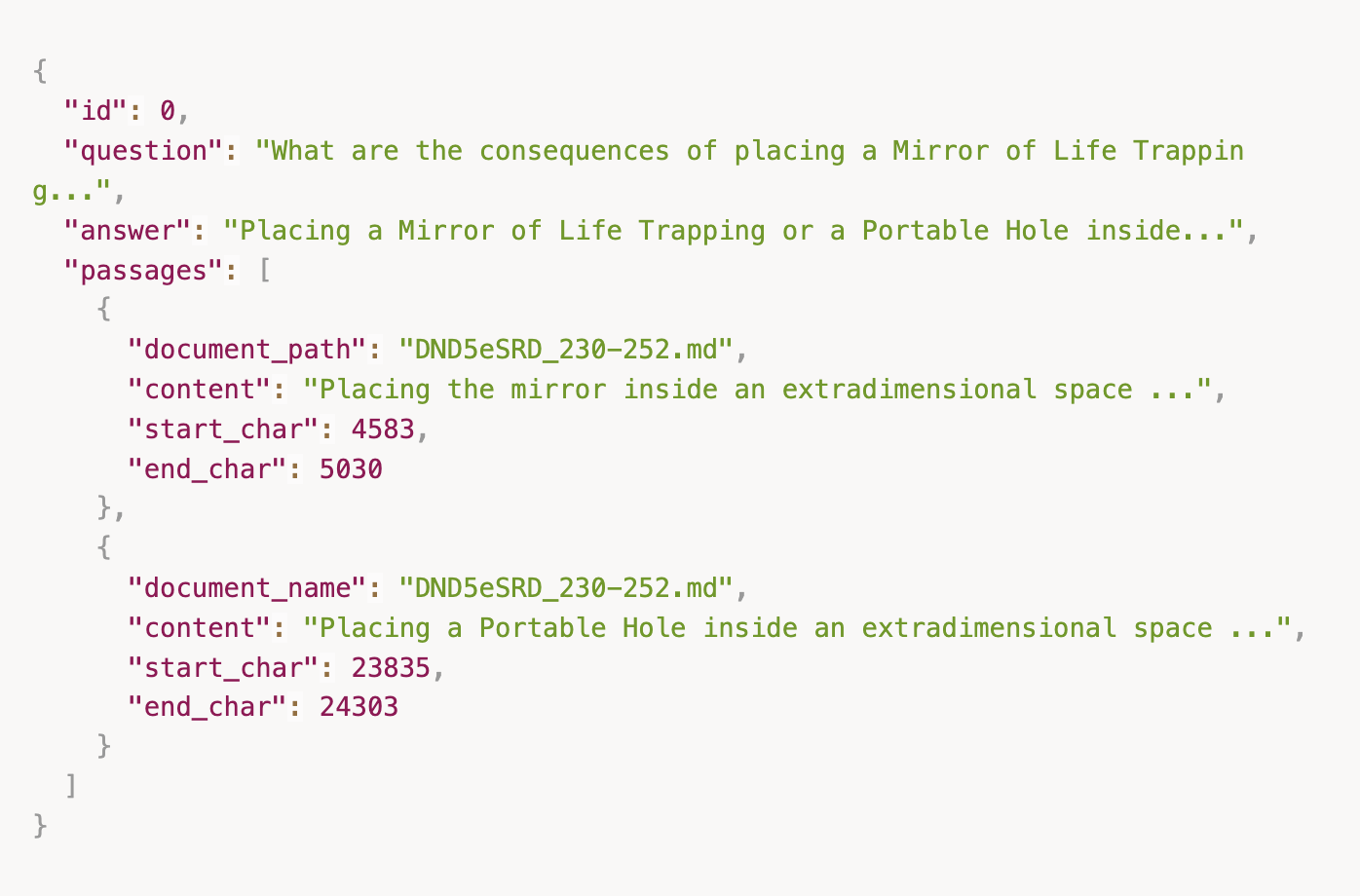

Each dataset item contains:

idquestionanswerpassages: a list of textual evidence, each withdocument_path(markdown file from the KB)start_char,end_char(intervals in the source text)content(the extracted span)

Why char-based? Character intervals are independent of your chunking strategy. Whatever splitter you use downstream, the spans remain valid—you can verify whether a chunk contains the necessary content.

Our KB is a collection of markdown files parsed from PDFs. The start_char/end_char references point to normalized text, maximizing reproducibility and portability.

Question generation

Easy

For easy questions, we automate: randomly select a markdown document from the KB and ask an LLM to generate a focused question about that document. At each iteration, we pass previously generated questions for that file to avoid duplicates.

This produces focused single-document questions, but not cross-document queries.

Medium

Medium questions require domain experts from the start. We want cross-document questions and non-trivial reasoning. Passing the entire KB to an LLM isn't always realistic and tends to produce questions that are too easy or not useful. Here, experts write questions from scratch. We chose D&D because we have expertise in it (we also maintain two internal NDA-covered datasets).

Answer and passage generation

Easy

Given a question and a source document, we call an LLM to produce the answer and identify the necessary passages with their spans. Each question-answer-passages triplet goes through quality control by a human expert who can accept, reject, or correct it.

Medium (multi-hop and wide)

Unlike "easy" questions, where answers can be found in a single document with substantially contiguous text, creating a medium-difficulty dataset required us to imagine scenarios where the necessary information is scattered.

We identified two main scenarios that characterize this medium difficulty: multi-hop questions, where the answer requires a chain of reasoning across multiple documents (for example, first finding a general rule in one document, then a specific exception in another, and finally applying both to a particular case described in a third), and wide questions, where the complete answer requires aggregating information from multiple documents without necessarily following a complex reasoning chain, but rather gathering scattered pieces of information that all contribute to the final answer.

The general generation loop (medium questions only):

- Expert formulates the question and provides initial hints

- System produces answer + passages

- Expert verifies and, if unsatisfied, adds hints (narrow the domain, suggest plausible documents, clarify conditions)

- Repeat until answer and spans are satisfactory

For medium questions, we separated these two problem types, each with its own strategy. In both cases, if the answer isn't satisfactory, we enter an iterative loop of expert suggestions until we reach acceptable quality.

-

Complex multi-hop → Claude Skills: Skills are a tool Anthropic recently introduced in this post. We built a custom skill containing:

- A markdown manual providing a general overview of the documents

- The knowledge base in markdown

- Two Python tools: one to search for specific text patterns in the documents and one to expand context around found matches, allowing retrieval of surrounding content and obtaining information about document structure

The goal was to create a Claude Skill that mimicked the behavior of a highly evolved RAG system, prioritizing answer quality over cost and latency. While these last two variables are hardly acceptable in many applications, they are less critical during dataset creation.The skill operates on our complete knowledge base in markdown (for the D&D dataset, this consists of 20 markdown files extracted from SRD 5.2.1). The domain expert provides a custom-crafted question, and the skill executes the following steps:

- The skill accesses an additional markdown document containing a general overview of the KB—how to interpret it, how to explore it, naming conventions, document structure, etc.

- The skill uses the search tools to explore documents and gather information. This step can be repeated multiple times: if the skill determines additional information is needed, it performs new searches, refines criteria, or explores related documents.

- All information collected during the various search steps is aggregated.

- The skill produces a complete answer based on all gathered information, precisely documenting all passages used.

This approach excels at multi-hop. The costs, though, aren't negligible. Across multiple questions, we saw average costs of ~$2 per question, with peaks up to $11.

-

Wide questions → LLM Retriever: For wide questions, we adopted an approach not based on semantic similarity, as it tends to be a bottleneck for result quality. We therefore chose to use LLMs as passage retrievers, following a technique similar to LlamaIndex's LLM Retriever, which take an entire document in context. This allows us to obtain much more accurate passages, albeit at a significantly higher cost which, as already specified for skills, is not a concern during dataset creation. We didn't use skills for wide questions as the costs would have been excessively high.

For each question, the steps are:

- Invoke an LLM retriever per document in parallel

- Each instance, given the entire document and the question, returns candidate passages for the answer

- An aggregator LLM combines the evidence and formulates the final answer, documenting all passages actually used

This approach is also expensive, but:

- More cost-efficient than Claude Skills for wide questions

- Poor performance on multi-hop questions (only makes a single extraction pass)

We excluded excessively generic or ambiguous questions from the dataset, such as "When can I use ability X instead of Y?". These types of questions tend to generate unsatisfactory answers with both approaches (Claude Skills and LLM Retriever), as they often require contextual interpretation or admit multiple valid answers, making them unsuitable for objective evaluation.

Public dataset: D&D SRD 5.2.1

Using this framework, we built a public dataset based on D&D SRD 5.2.1, which covers a subset of fifth edition D&D content (Player's Handbook, DM Guide, Monster Manual). The SRD is released under Creative Commons, so we can make it public. The dataset follows the structure above, is chunk-agnostic thanks to char-based annotations, and includes both easy and medium questions.

Domain experts perform quality control in three stages:

- Semantic validation: Is the answer correct given the evidence? Is there complete passage coverage?

- Span validation: Are the

start_char/end_charintervals aligned and reproducible in the KB files? - Relevance and difficulty: Does the question actually belong to the intended easy/medium class?

If validation fails, we reject the item or (for medium questions) revise it with additional hints.

Dataset Statistics

- Total Questions: 56

- Easy Tier: 25 questions

- Medium Tier: 31 questions

- Source Documents: 20 markdown files from D&D SRD 5.2.1

- Domain: Tabletop role-playing game rules and mechanics

The complete dataset, along with the code for generation and validation, is publicly available in the project's GitHub repository and on HuggingFace.

Current limitations and future improvements

- Required vs. optional evidence: We don't currently distinguish between required and optional evidence in evaluation. This can penalize systems that find correct answers through alternative paths (e.g., agent-style or complex skills), particularly Claude Skills when it follows non-linear search paths. We plan to improve our passage taxonomy.

- Advanced agentic RAG: We want to experiment with deep research pipelines (multi-phase, verification, self-consistency) to strengthen medium question generation and enrich annotations.

- Hard questions: We're building a system to create a third difficulty tier beyond current medium questions, for evaluating highly complex RAG systems and deep research approaches. These questions will combine both multi-hop and wide characteristics.

- Multimodality: Since the dataset is based on character positions, it doesn't yet evaluate the ability to correctly find relevant images or charts.

Conclusions and next steps

We've presented a practical methodology for building evaluation datasets for RAG pipelines: char-based structure, difficulty levels, semi-automated generation with human oversight, and specialized tools for multi-hop and wide questions. We're releasing a public dataset based on D&D SRD 5.2.1 to enable transparent, reproducible evaluations. The dataset along with the code for generation and validation, is publicly available in the project's GitHub repository and on HuggingFace.

Coming soon: a dedicated post on Anthropic’s Contextual Retrieval, where we'll use these datasets to evaluate improvements over classical retrieval approaches.

Raul Singh - GitHub - LinkedIn - AI R&D Engineer - Datapizza

Ling Xuan “Emma” Chen - GitHub - LinkedIn - AI R&D Engineer - Datapizza

Francesco Foresi - GitHub - LinkedIn - GenAI R&D Team Lead - Datapizza